Open Data Fabric

Open protocol for decentralized exchange and transformation of data

Website | Repository | Reference Implementation | Original Whitepaper | Chat

Introduction

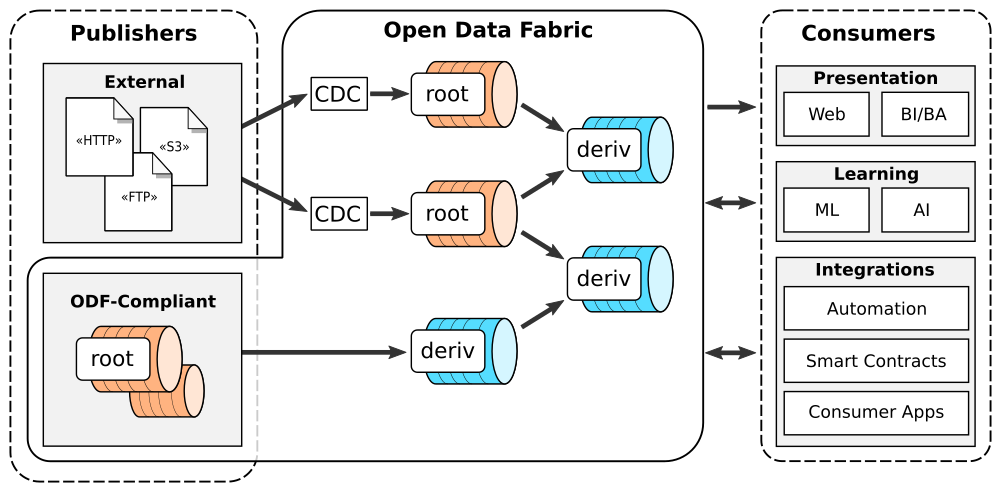

Open Data Fabric (ODF) is an open protocol specification for decentralized exchange and transformation of semi-structured data that aims to holistically address many shortcomings of modern data management systems and workflows.

The goal of this specification is to develop a method of data exchange that would:

- Enable worldwide collaboration around data cleaning, enrichment, and derivation

- Create an environment of verifiable trust between participants without the need for a central authority

- Enable a high degree of data reuse, making quality data more readily available

- Improve liquidity of data by shortening propagation times from publishers to consumers

- Create a feedback loop between data consumers and publishers, allowing them to collaborate on better data availability, recency, and design

The ODF protocol combines four decades of evolution in enterprise analytical data architectures with innovations in Web3 cryptography and trustless networks to create a decentralized data supply chain that can provide timely, high-quality, and verifiable data for data science, AI, smart contracts, and applications.

Quick Summary

From a bird’s-eye view, ODF specifies the following aspects of data management, with each layer building on the previous ones:

Data Format

- Parquet columnar format is used for efficient storage of raw data

- Event streams (not tables) as the logical model of a dataset, with a focus on supporting high-frequency real-time data

- All data forms an immutable ledger - streams evolve only by appending new events

- Retractions & corrections are explicit - full history of evolution of data is preserved by default

- Storage-agnostic layout based on blob hashes adds support for decentralized content-addressable file systems
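The data model above can be illustrated with a minimal sketch. It is not taken from the specification: the operation names (`append`, `retract`, `correct`), the `EventStream` class, and the use of JSON in place of Parquet are all assumptions made to keep the example self-contained. It shows a stream that only grows by appending events, expresses retractions and corrections as new events, and derives a content address from its serialized bytes.

```python
import hashlib
import json
from dataclasses import dataclass, field

# Hypothetical operation codes for this sketch (not taken from the ODF spec)
APPEND, RETRACT, CORRECT = "append", "retract", "correct"


@dataclass
class EventStream:
    """Append-only log: existing events are never mutated or deleted."""
    events: list = field(default_factory=list)

    def append(self, op, key, value=None):
        self.events.append(
            {"offset": len(self.events), "op": op, "key": key, "value": value}
        )

    def current_state(self):
        """Fold the full history into the latest view of the data."""
        state = {}
        for e in self.events:
            if e["op"] == RETRACT:
                state.pop(e["key"], None)
            else:  # APPEND or CORRECT
                state[e["key"]] = e["value"]
        return state

    def blob_hash(self):
        """Content address of the serialized events (JSON stands in for Parquet)."""
        data = json.dumps(self.events, sort_keys=True).encode()
        return hashlib.sha256(data).hexdigest()


stream = EventStream()
stream.append(APPEND, "sensor-1", 20.5)
stream.append(APPEND, "sensor-2", 19.0)
stream.append(CORRECT, "sensor-1", 21.5)  # correction is a new event
stream.append(RETRACT, "sensor-2")        # retraction is a new event

print(stream.current_state())  # {'sensor-1': 21.5}
print(len(stream.events))      # 4
```

The latest state reflects the correction and the retraction, while all four events remain in the log, so the full history stays available for audit.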

Metadata Format

- Metadata format is extensible and records all aspects of the dataset lifecycle: from creation and identity, to adding raw data, and to licensing, stewardship, and governance

- Metadata is organized into a cryptographic ledger (similar to git history or a blockchain) to keep it tamper-proof

- Block hash of the metadata ledger serves as a stable reference to what a dataset looked like at a specific point in time (Merkle root)

- Maintenance operations on metadata include compactions, history pruning, indexing, etc.
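The idea of a hash-linked metadata ledger can be sketched as follows. This is an illustrative toy, not the ODF metadata format: the block layout (`prev`, `event`), the event names, and the JSON-based hashing are assumptions. It shows why the hash of the latest block acts as a stable reference to the whole history: changing any earlier block breaks the chain.

```python
import hashlib
import json


def block_hash(block):
    """Hash of a block's canonical JSON encoding (an assumed encoding)."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def append_block(chain, event):
    """Append a metadata block that links to the previous block by hash."""
    prev = block_hash(chain[-1]) if chain else None
    chain.append({"prev": prev, "event": event})
    return block_hash(chain[-1])


def verify_chain(chain, head_hash):
    """Check that every link is intact and the head matches the expected hash."""
    for i in range(1, len(chain)):
        if chain[i]["prev"] != block_hash(chain[i - 1]):
            return False
    return bool(chain) and block_hash(chain[-1]) == head_hash


chain = []
append_block(chain, {"kind": "seed", "dataset": "example"})
append_block(chain, {"kind": "add_data", "blob": "abc123"})
head = append_block(chain, {"kind": "set_license", "name": "CC-BY-4.0"})

print(verify_chain(chain, head))  # True

chain[1]["event"]["blob"] = "tampered"
print(verify_chain(chain, head))  # False
```

A consumer who remembers only `head` can detect any later rewrite of the history, which is the property the ledger relies on.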

Identity, Ownership, Accountability

- W3C DIDs are used to uniquely identify datasets on the network - they are an irrevocable part of the metadata chain

- DIDs likewise identify actors: data owners, processors, validators, replicators…

- DID key signing chains are used to prove ownership over datasets in a decentralized network

- Metadata blocks support actor signatures to assign accountability

Data Transfer Protocols

- ODF “nodes” that range from CLI tools to analytical clusters can exchange data using a set of protocols

- “Simple transfer protocol” is provided for maximal interoperability

- “Smart transfer protocol” is provided for secure yet highly efficient direct-to-storage data replication

Processing & Query Model

- Derivative data (i.e. data created by transforming other data) is a first-class citizen in ODF

- Stateful temporal/stream processing is used as the core primitive in ODF to create derivative data pipelines (Kappa architecture) that are highly autonomous and near-zero maintenance

- All derivative data is traceable, verifiable, and auditable

- No matter how many hands and transformation steps data went through - we can always tell where every bit came from, who processed it, and how

- Verifiable computing techniques are extended to work with temporal processing via:

  - Determinism + Reproducibility

  - Zero-Knowledge (TEEs, FHE, MPC, ZK proofs)

- Verifiable querying - allows every query result to be accompanied by a reliable proof that it was executed correctly, even when processing is done by an unreliable 3rd party

- Abstract engine interface spec allows easily adding new engine implementations as plug-ins.
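Verification through determinism and reproducibility can be sketched as a replay check. The example below is a simplified illustration under stated assumptions: `running_total`, `content_hash`, and `verify_by_replay` are hypothetical names, and JSON hashing stands in for whatever encoding an actual implementation uses. If a transformation is deterministic, anyone holding the same inputs can re-run it and compare the result against the hash the processor published.

```python
import hashlib
import json


def content_hash(records):
    """Hash of the records in order (assumed JSON encoding)."""
    return hashlib.sha256(json.dumps(records, sort_keys=True).encode()).hexdigest()


def running_total(events):
    """A deterministic, stateful stream transformation: cumulative sum per key."""
    totals, out = {}, []
    for e in events:
        totals[e["key"]] = totals.get(e["key"], 0) + e["amount"]
        out.append({"key": e["key"], "total": totals[e["key"]]})
    return out


def verify_by_replay(transform, inputs, claimed_output_hash):
    """Re-run the transformation and compare with the published output hash."""
    return content_hash(transform(inputs)) == claimed_output_hash


inputs = [
    {"key": "a", "amount": 5},
    {"key": "b", "amount": 3},
    {"key": "a", "amount": 2},
]

honest_claim = content_hash(running_total(inputs))
forged_claim = content_hash([{"key": "a", "total": 999}])

print(verify_by_replay(running_total, inputs, honest_claim))  # True
print(verify_by_replay(running_total, inputs, forged_claim))  # False
```

Replay only works when the transformation has no hidden sources of non-determinism (wall-clock time, random numbers, unordered iteration), which is why determinism is listed alongside reproducibility.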

Dataset Discovery

- Global, permissionless, censorship-proof dataset registry is proposed to advertise datasets (blockchain-based)

Privacy & Access Control

- Node-level ReBAC permission management

- Dataset encryption - allows exchanging private data via open non-secure storage systems

- Key exchange system (smart contract) is proposed to facilitate negotiating access to encrypted private data

- Advanced encryption schemes can be used to reveal only part of the full dataset history, enabling e.g. latency-based monetization schemes.

Federated Querying

- Thanks to DIDs and content-addressability, the queries and transformations in ODF can express “what needs to be done” without depending on “where data is located”

- ODF nodes can form ad hoc peer-to-peer networks to:

  - Execute queries that touch data located in different organizations

  - Continuously run cross-organizational streaming data processing pipelines

- The verifiability scheme is designed to swiftly expose malicious actors.
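Location independence through content addressing can be illustrated with a small sketch. The `Node` class and `fetch` function are hypothetical in-memory stand-ins, not part of any ODF protocol. The request names only the content hash; any node may serve it, and a copy that does not match the hash is rejected regardless of who supplied it.

```python
import hashlib


def address_of(data: bytes) -> str:
    """Content address of a blob (SHA-256 is an assumption of this sketch)."""
    return hashlib.sha256(data).hexdigest()


class Node:
    """Hypothetical in-memory stand-in for a node's blob storage."""

    def __init__(self, name):
        self.name = name
        self.blobs = {}

    def put(self, data: bytes) -> str:
        addr = address_of(data)
        self.blobs[addr] = data
        return addr


def fetch(addr, nodes):
    """Return the blob from the first node whose copy matches the address."""
    for node in nodes:
        data = node.blobs.get(addr)
        if data is not None and address_of(data) == addr:
            return data, node.name
    raise KeyError(addr)


org_a, org_b, mallory = Node("org-a"), Node("org-b"), Node("mallory")
addr = org_b.put(b"temperature,21.5")

# A malicious node advertises forged bytes under the same address
mallory.blobs[addr] = b"forged"

data, served_by = fetch(addr, [mallory, org_a, org_b])
print(served_by)  # org-b
```

The caller never specifies where the data lives, and the forged copy is skipped because its hash does not match the requested address.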

Introductory materials

- Original Whitepaper (July 2020)

- Kamu Blog: Introducing Open Data Fabric

- Talk: Open Data Fabric for Research Data Management

- PyData Global 2021 Talk: Time: The most misunderstood dimension in data modelling

- Data+AI Summit 2020 Talk: Building a Distributed Collaborative Data Pipeline

More tutorials and articles can be found in the kamu-cli documentation.

Current State

The specification is actively evolving and welcomes feedback.

See also our Roadmap for future direction and the RFC archive for the record of changes.

Known Implementations

Coordinator implementations:

- kamu-cli - data management tool that serves as the reference implementation.

Engine implementations:

- kamu-engine-spark - engine based on Apache Spark.

- kamu-engine-flink - engine based on Apache Flink.

- kamu-engine-datafusion - engine based on Apache DataFusion.

- kamu-engine-risingwave - engine based on RisingWave.

History

The specification was originally developed by Kamu as part of the kamu-cli data management tool. While developing it, we quickly realized that the very essence of what we’re trying to build - a collaborative open data processing pipeline based on verifiable trust - requires full transparency and openness on our part. We strongly believe in the potential of our ideas to bring data management to the next level, to provide better quality data faster to the people who need it to innovate, fight diseases, build better businesses, and make informed political decisions. Therefore, we saw it as our duty to share these ideas with the community and make the system as inclusive as possible for the existing technologies and future innovations, and work together to build momentum needed to achieve such radical change.

Contributing

If you like what we’re doing, support us by starring the repo. This helps us a lot!

For the list of our community resources and guides on how to contribute, start here.